Video

Summary

The goal of our project was to generate 3D structures using a generative adversarial network (GAN). Our AI, “Alaina”, will parse the input text for keywords, which are used to identify the type of object to generate. Once the type of object has been decided, the appropriate pre-trained model is loaded and the generative model is fed random noise. The output of the generative model is a 3D matrix holding, for each cell, the probability that the cell is occupied. The probabilities are rounded and the model is then rendered: 0’s are interpreted as empty space and 1’s as occupied space.
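The rounding step can be sketched as follows. This is a minimal illustration, not the project's code: the function name `binarize` and the 0.5 threshold are assumptions, and the grid is a plain nested list rather than whatever array type the real pipeline uses.

```python
def binarize(probabilities, threshold=0.5):
    """Convert a 3D nested list of occupancy probabilities into 0/1 voxels.

    Assumption: a cell counts as occupied when its probability is at least
    `threshold`. Library rounding functions may treat exactly 0.5 differently.
    """
    return [
        [[1 if p >= threshold else 0 for p in row] for row in plane]
        for plane in probabilities
    ]


# Tiny 1x2x2 stand-in for the 30x30x30 generator output.
example_output = [[[0.10, 0.90], [0.75, 0.40]]]
voxels = binarize(example_output)
```

Here `voxels` holds 1 wherever a block should be rendered and 0 wherever the space stays empty.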

Approach

We started with a dataset of 3D objects (chairs) in the Object File Format (.off). The format represents a geometric figure by specifying the surface polygons of the model. The layout of a .off file is fairly simple: a list of all vertices, followed by a list of faces, each given as indices into the vertex list. The .off objects were then converted to voxels using our own Python scripts. The scripts work by determining whether a voxel lies on a surface of the mesh. First, the dimensions of the 3D mesh are scaled to fit within a 30x30x30 grid.
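To make the file layout and the scaling step concrete, here is a minimal sketch. It is not the project's script: `parse_off` and `scale_to_grid` are hypothetical names, the parser assumes a plain ASCII file whose first token is `OFF` with no per-face colour values, and the uniform scale factor (which preserves the aspect ratio) is an assumption.

```python
def parse_off(text):
    """Parse a minimal ASCII OFF file into (vertices, faces)."""
    tokens = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if line:
            tokens.extend(line.split())
    if not tokens or tokens[0] != "OFF":
        raise ValueError("not an OFF file")
    n_vertices, n_faces = int(tokens[1]), int(tokens[2])  # tokens[3] is the edge count
    pos = 4
    vertices = []
    for _ in range(n_vertices):
        vertices.append(tuple(float(t) for t in tokens[pos:pos + 3]))
        pos += 3
    faces = []
    for _ in range(n_faces):
        count = int(tokens[pos])  # number of vertices in this face
        faces.append(tuple(int(t) for t in tokens[pos + 1:pos + 1 + count]))
        pos += 1 + count
    return vertices, faces


def scale_to_grid(vertices, size=30):
    """Shift and uniformly scale vertices so every coordinate lies in [0, size - 1]."""
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    extent = max(maxs[i] - mins[i] for i in range(3)) or 1.0  # avoid dividing by zero
    scale = (size - 1) / extent
    return [tuple((v[i] - mins[i]) * scale for i in range(3)) for v in vertices]


# A tetrahedron: 4 vertices, 4 triangular faces, 6 edges.
EXAMPLE_OFF = """OFF
4 4 6
0 0 0
1 0 0
0 1 0
0 0 1
3 0 1 2
3 0 1 3
3 0 2 3
3 1 2 3
"""

vertices, faces = parse_off(EXAMPLE_OFF)
scaled = scale_to_grid(vertices)
```

Real datasets sometimes deviate from this layout (for example, a header fused with the counts on one line), so a production parser would need extra handling.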

The surfaces of the mesh are then converted into voxels by checking whether nearby points overlap with the given surface. We check for overlap by calculating the normal vector of the surface and projecting the vector from the surface to each candidate point onto this normal. If the magnitude of the projected vector is within a certain threshold, the point lies on (or very close to) the same plane as the surface.
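A minimal sketch of this plane test, assuming triangular faces. The helper names and the default threshold of 0.5 grid units are illustrative assumptions, not values taken from the project's scripts.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def plane_distance(point, a, b, c):
    """Distance from `point` to the plane through triangle (a, b, c)."""
    normal = cross(sub(b, a), sub(c, a))
    length = math.sqrt(dot(normal, normal))
    if length == 0.0:
        raise ValueError("degenerate triangle")
    # Length of the projection of (point - a) onto the unit normal.
    return abs(dot(sub(point, a), normal)) / length


def near_plane(point, a, b, c, threshold=0.5):
    """True when the point is within `threshold` of the triangle's plane."""
    return plane_distance(point, a, b, c) <= threshold


a, b, c = (0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0)
close_point = (1.0, 1.0, 0.25)
far_point = (1.0, 1.0, 3.0)
```

With this triangle lying in the z = 0 plane, `close_point` passes the test and `far_point` does not.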

However, to check whether the point actually lies within the bounds of the surface, we then calculate the barycentric coordinates of that point in relation to the surface. If all of the barycentric coordinates are non-negative, the point is within the bounds of the surface.
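The bounds check can be sketched like this, again assuming triangular faces. The dot-product formulation below is one standard way to compute barycentric coordinates and may differ from what the project's scripts do; the function names and the small tolerance `eps` are assumptions.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def barycentric(point, a, b, c):
    """Barycentric coordinates (u, v, w) of `point` with respect to triangle (a, b, c).

    For a point slightly off the plane, this yields the coordinates of its
    orthogonal projection onto the plane.
    """
    v0, v1, v2 = sub(b, a), sub(c, a), sub(point, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    if denom == 0:
        raise ValueError("degenerate triangle")
    v = (d11 * d20 - d01 * d21) / denom
    w = (d00 * d21 - d01 * d20) / denom
    return 1.0 - v - w, v, w


def inside_triangle(point, a, b, c, eps=1e-9):
    """True when no barycentric coordinate is negative (up to `eps`)."""
    return all(coord >= -eps for coord in barycentric(point, a, b, c))


a, b, c = (0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0)
inside = inside_triangle((1.0, 1.0, 0.0), a, b, c)
outside = inside_triangle((5.0, 5.0, 0.0), a, b, c)
```

Because the three coordinates always sum to 1, requiring all of them to be non-negative is enough to confine the point to the triangle.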

Here are the equations used in these calculations:
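The original equations did not survive on this page. The block below is a reconstruction from the description above, assuming each surface is a triangle with vertices A, B, C, a candidate point P, and a distance threshold ε; the symbols are assumptions, and the barycentric formulas are one standard formulation rather than necessarily the one used in the scripts.

```latex
% Plane test: distance from P to the plane of the triangle.
\[
\mathbf{n} = (B - A) \times (C - A), \qquad
d = \frac{\lvert (P - A) \cdot \mathbf{n} \rvert}{\lVert \mathbf{n} \rVert} \le \varepsilon
\]

% Bounds test: barycentric coordinates (u, v, w) of P.
\[
\mathbf{v}_0 = B - A, \quad \mathbf{v}_1 = C - A, \quad \mathbf{v}_2 = P - A
\]
\[
v = \frac{(\mathbf{v}_1 \cdot \mathbf{v}_1)(\mathbf{v}_2 \cdot \mathbf{v}_0)
        - (\mathbf{v}_0 \cdot \mathbf{v}_1)(\mathbf{v}_2 \cdot \mathbf{v}_1)}
       {(\mathbf{v}_0 \cdot \mathbf{v}_0)(\mathbf{v}_1 \cdot \mathbf{v}_1)
        - (\mathbf{v}_0 \cdot \mathbf{v}_1)^2},
\qquad
w = \frac{(\mathbf{v}_0 \cdot \mathbf{v}_0)(\mathbf{v}_2 \cdot \mathbf{v}_1)
        - (\mathbf{v}_0 \cdot \mathbf{v}_1)(\mathbf{v}_2 \cdot \mathbf{v}_0)}
       {(\mathbf{v}_0 \cdot \mathbf{v}_0)(\mathbf{v}_1 \cdot \mathbf{v}_1)
        - (\mathbf{v}_0 \cdot \mathbf{v}_1)^2},
\qquad
u = 1 - v - w
\]
\[
u \ge 0, \quad v \ge 0, \quad w \ge 0
\]
```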

If both of these conditions are true, a voxel is placed at the point. The output of the script is a 3D matrix with dimensions 30x30x30; the matrix is binary encoded, meaning that entries of 0 signify an empty block and entries of 1 signify a filled cube.

GANs learn from examples, which means they need an existing dataset to train on. A GAN is made up of two networks, a generator and a discriminator. The two networks are in constant competition, each trying to outsmart the other: while the generator learns to create models that fool the discriminator, the discriminator gets better at spotting fakes.

The generator model is composed of several transposed convolutional layers. It takes a random noise vector as input and outputs a 30x30x30 matrix. It uses the Adam optimizer to update the model's parameters; Adam is a variant of stochastic gradient descent with adaptive per-parameter step sizes. The loss of the model is calculated using binary cross entropy, which is defined by this equation:
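The original equation image is missing here. The standard definition of binary cross entropy over a batch of N samples is given below, where y_i ∈ {0, 1} is the target label and ŷ_i is the predicted probability; the notation is an assumption, since the original symbols are not available.

```latex
\[
\mathcal{L}(y, \hat{y}) = -\frac{1}{N} \sum_{i=1}^{N}
\Bigl[\, y_i \log \hat{y}_i + (1 - y_i) \log\bigl(1 - \hat{y}_i\bigr) \Bigr]
\]
```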

The discriminator model is composed of several convolutional layers. It takes a 30x30x30 matrix as input and outputs the probability that the input is fake. This model also uses an Adam optimizer, as well as binary cross entropy to calculate loss.
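To show how binary cross entropy drives the two networks against each other, here is a minimal pure-Python sketch. It follows the convention stated above that the discriminator outputs the probability of an input being fake (so fake = 1, real = 0); the function names, the clamping constant `eps`, and the exact form of the generator loss are assumptions, not the project's training code.

```python
import math


def binary_cross_entropy(labels, predictions, eps=1e-7):
    """Mean binary cross entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, predictions):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(labels)


def discriminator_loss(scores_on_real, scores_on_fake):
    """The discriminator wants real samples scored 0 and generated samples scored 1."""
    labels = [0] * len(scores_on_real) + [1] * len(scores_on_fake)
    return binary_cross_entropy(labels, list(scores_on_real) + list(scores_on_fake))


def generator_loss(scores_on_fake):
    """The generator wants its samples to be scored as real (label 0)."""
    return binary_cross_entropy([0] * len(scores_on_fake), list(scores_on_fake))


# A confident, correct discriminator: low loss for it, high loss for the generator.
d_loss = discriminator_loss(scores_on_real=[0.1], scores_on_fake=[0.9])
g_loss = generator_loss(scores_on_fake=[0.9])
```

In this example the discriminator is doing well, so `d_loss` is small while `g_loss` is large; as the generator improves, the balance shifts the other way.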

Evaluation

In terms of qualitative evaluations, we would like to evaluate the generated structures by how seamlessly they pass the eye-test. We’d like to ask questions such as, ‘Does the structure look at all abnormal?’, ‘Does it resemble the desired item?’, or ‘Is the object structurally sound?’

As for quantitative evaluations, we will look at the training time of the model.

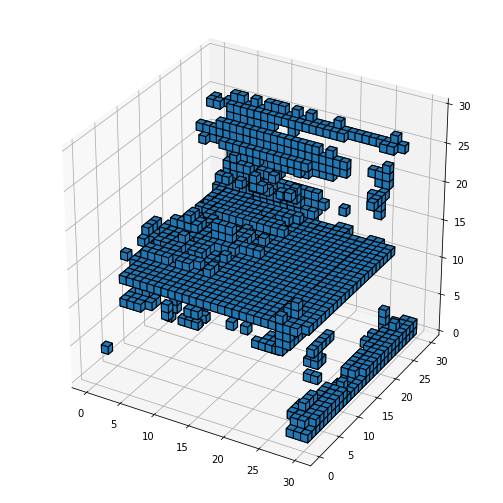

Here is an example of a chair we have generated with our current model:

From this image we can see that the chair is starting to take form, but it is obviously missing some features and is generally very noisy.

Remaining Goals and Challenges

Our goals for the future include further refining our training model to achieve better-looking results and expanding the versatility of our model to handle multiple types of objects besides chairs. Lastly, if time permits, we want to implement text commands, which will generate an item depending on a text input.

A possible challenge is that there is too much variation in our dataset. In particular, chairs vary enormously in form: some are round, some are rectangular, some have legs, some have wheels, and so on. This could potentially be overcome by increasing training time.